Exploring AI Chipsets and Market Trends for 2024

Dive into the world of AI chipsets, their functions, key manufacturers for 2024, and emerging market trends. Discover how these specialized hardware components drive AI performance, tackle industry challenges, and shape the future of technology.

Introduction to AI Chipsets

AI chipsets are specialized hardware designed to execute artificial neural network (ANN)-based applications efficiently and effectively. Key features of these chipsets include strong processing power, low power consumption, ample memory, and fast data transport. These factors are crucial in determining the performance, cost, and scalability of AI applications.

Despite facing challenges such as data security concerns, lack of standardization, and a shortage of skilled AI professionals, the AI chipset market is anticipated to experience significant growth. This growth is driven by ongoing technological advancements and the increasing demand for AI-powered products and services.

AI chipsets, also known as artificial intelligence processors, are specialized hardware components engineered to expedite AI-related tasks. These tasks predominantly include machine learning (ML) and deep learning (DL) computations, which are pivotal in various applications such as natural language processing, image recognition, and autonomous driving. Unlike general-purpose processors, AI chipsets are tailored for high-efficiency and high-performance computing, enabling faster data processing and lower power consumption.

The evolution of AI chipsets can be traced back to the growing demand for more sophisticated and efficient ways to handle complex algorithms and large datasets. Initially, general-purpose CPUs were employed to manage AI workloads, but they quickly reached their limits in terms of speed and efficiency. This led to the development of Graphics Processing Units (GPUs), which offered a significant improvement due to their parallel processing capabilities. However, as AI applications continued to evolve, the need for even more specialized hardware became apparent, giving rise to dedicated AI chipsets.

Today, AI chipsets are integral to the technological landscape, driving advancements in a multitude of sectors. From enhancing the capabilities of smartphones and smart home devices to powering data centers and supporting cutting-edge research, these chipsets have become indispensable. They are designed to handle specific types of calculations required for AI models, leading to faster training times and more efficient inference processes. This specialization not only boosts performance but also allows for more innovative and complex AI solutions to be developed and deployed.

In essence, AI chipsets represent a critical component in the advancement of artificial intelligence technology. Their role in accelerating AI computations has made them a cornerstone in fields ranging from healthcare to finance, underscoring their importance in shaping the future of technology.

AI chipsets are specialized hardware designed to optimize the processing of complex algorithms, manage extensive datasets, and execute parallel computations with greater efficiency than traditional CPUs and GPUs. These advanced capabilities are crucial for the rapid advancements in artificial intelligence and machine learning technologies.

Optimized Processing of Complex Algorithms

One of the primary functions of AI chipsets is their ability to process intricate algorithms at high speeds. These chipsets are engineered to handle the sophisticated mathematical models and computations required in AI applications. For instance, deep learning models, which involve multiple layers of neural networks, benefit significantly from the parallel processing capabilities of AI chipsets. This optimized processing allows for faster training times and more accurate predictions, making AI systems more efficient and effective.

Handling Large Datasets

AI applications often require the analysis of massive amounts of data to identify patterns, make decisions, and predict outcomes. AI chipsets are designed to manage and process these large datasets efficiently. They incorporate high-bandwidth memory and advanced data management techniques to ensure that data can be accessed and processed quickly. This capability is particularly important in fields like genomics, where vast quantities of genetic data need to be analyzed to find correlations and insights.

Performing Parallel Computations

Parallel computation is a critical capability of AI chipsets, allowing them to perform multiple tasks simultaneously. This is achieved through architectures that support concurrent processing streams, such as tensor processing units (TPUs) and neural processing units (NPUs). Tasks like image recognition, where thousands of pixels need to be analyzed at once, and natural language processing, which involves understanding and generating human language, are significantly accelerated through these parallel processing capabilities.
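To make the idea of concurrent processing streams concrete, here is a minimal Python sketch. It assumes a tiny matrix multiplication as a stand-in for a neural-network layer; the function names are hypothetical and chosen only for illustration. Each output row depends only on its own input row, so the rows can be computed independently. Python threads will not make this faster; the point is the independence of the work items, which is what lets dedicated hardware run many multiply-accumulate units at once.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Multiply-accumulate over one row and one column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(a, b):
    """Reference version: compute every output element one after another."""
    cols = list(zip(*b))  # columns of b
    return [[dot(row, col) for col in cols] for row in a]


def matmul_parallel(a, b, workers=4):
    """Compute each output row as an independent task.

    No row needs the result of another row, so the tasks can run
    concurrently. pool.map returns results in input order.
    """
    cols = list(zip(*b))

    def compute_row(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(compute_row, a))


# Illustrative 3x2 and 2x3 matrices (made-up values).
a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8, 9], [10, 11, 12]]
result = matmul_parallel(a, b)
print(result)
```

Both functions return the same matrix; only the scheduling of the row computations differs.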

AI chipsets excel in applications that require high computational power and efficiency. For example, in autonomous driving, AI chipsets process real-time data from sensors and cameras to make split-second decisions, ensuring safe navigation. Similarly, in healthcare, AI chipsets enable advanced diagnostic tools that can analyze medical images and patient data to provide accurate diagnoses and treatment recommendations.

NVIDIA

NVIDIA has maintained its position as a leader in the AI chipset market throughout 2024. Known for its powerful GPUs, NVIDIA has continued to innovate with AI-focused data center chips such as the A100 and the H100. These chipsets are designed to handle massively parallel processing tasks, making them well suited to AI training and inference. The company's focus on deep learning and machine learning has enabled it to secure partnerships with major tech firms and research institutions, driving advancements in autonomous vehicles, robotics, and other AI-driven technologies. NVIDIA CEO Jensen Huang announced the company's next AI chip architecture, dubbed “Rubin,” ahead of the COMPUTEX tech conference in Taipei.

Intel

Intel has also solidified its position in the AI chipset sector with its Xeon processors and Movidius VPUs. Intel's Xeon Scalable processor line offers enhanced performance for AI workloads by integrating AI acceleration directly into the CPU. Additionally, Intel's acquisition of Habana Labs has allowed it to offer the Gaudi and Goya AI processors, which are optimized for deep learning training and inference, respectively. These innovations have made Intel a formidable player in the data center and edge AI markets.

With the growing demand for deep learning and generative AI, the need for enhanced computing performance, efficiency, usability, and choice has never been greater. Intel® Gaudi® AI accelerators and software are designed for data center training and inference, whether in the cloud or on-premises. Intel aims to make the benefits of deep learning more accessible to enterprises and organizations.

Intel’s Xeon 6 processor is set to offer improved performance and power efficiency for high-intensity data center workloads, according to CEO Pat Gelsinger at the Computex tech conference in Taiwan.

AMD

AMD has made significant strides in the AI chipset industry with its Ryzen and EPYC processors. The company's EPYC lineup includes the Milan-X series, launched in 2022, which AMD positions for demanding compute workloads. AMD's focus on high-performance computing and energy efficiency has allowed it to capture a considerable share of the AI chipset market. The company's collaborations with cloud service providers and enterprise customers have further bolstered its position as a key player in AI-driven applications.

AMD has announced a new Instinct accelerator, the HBM3E-equipped MI325X. Based on the same computational silicon as the company’s MI300X accelerator, the MI325X swaps out HBM3 memory for faster and denser HBM3E, allowing AMD to produce accelerators with up to 288GB of memory and local memory bandwidth of 6TB/second.

AMD also showcased its first new CDNA architecture and Instinct product roadmap in two years, laying out its plans through 2026. The company plans to launch two new CDNA architectures and associated Instinct products in 2025 and 2026, respectively. The CDNA 4-powered MI350 series is scheduled for 2025, followed in 2026 by the more ambitious MI400 series, which will be based on the CDNA "Next" architecture.
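A rough back-of-the-envelope sketch shows what the MI325X figures quoted above (288GB of memory, 6TB/second of bandwidth) mean in practice. It assumes decimal units, 16-bit (2-byte) model weights, and that the whole memory holds weights only; real deployments also need room for activations and other state, so treat the results as upper bounds. The function names are hypothetical.

```python
GB = 10**9   # decimal gigabyte (assumption)
TB = 10**12  # decimal terabyte (assumption)


def max_parameters(memory_bytes, bytes_per_parameter):
    """Upper bound on parameters that fit if memory held weights only."""
    return memory_bytes // bytes_per_parameter


def full_memory_sweep_seconds(memory_bytes, bandwidth_bytes_per_second):
    """Time to read the entire memory once at peak bandwidth."""
    return memory_bytes / bandwidth_bytes_per_second


memory = 288 * GB    # figure quoted in the article
bandwidth = 6 * TB   # bytes per second, figure quoted in the article

params_16bit = max_parameters(memory, 2)              # 2 bytes per weight
sweep = full_memory_sweep_seconds(memory, bandwidth)

print(f"Parameters at 16-bit precision: {params_16bit / 1e9:.0f} billion")
print(f"One full pass over memory: {sweep * 1000:.0f} ms")
```

Under these assumptions, a single accelerator could hold on the order of 144 billion 16-bit parameters and read all of them once in roughly 48 milliseconds.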

Qualcomm

Qualcomm has continued to innovate in the AI chipset space with its Snapdragon series. The Snapdragon 8 Gen 3, announced in late 2023 and used in flagship phones through 2024, incorporates advanced AI processing units that enhance performance for mobile devices, IoT, and edge computing. Qualcomm's AI research and development have focused on improving on-device AI capabilities, enabling a range of applications from enhanced camera functionalities to real-time language translation. As a result, Qualcomm remains a dominant force in the mobile AI chipset market.

Qualcomm has also introduced the Snapdragon X Plus, a new chip in its Snapdragon X family for PCs. This chip is a streamlined version of the Snapdragon X Elite and is expected to power PCs launching in the coming months.

Key Features of the Snapdragon X Plus:

Performance and Efficiency: The Snapdragon X Plus features a 10-core Oryon CPU that, according to Qualcomm, delivers up to 37% faster CPU performance than competing chips while consuming up to 54% less power.

AI Capabilities: It includes a dedicated Qualcomm Neural Processing Unit (NPU) capable of 45 trillion operations-per-second (TOPS). This NPU is touted as the fastest in the world for laptops.

Advanced Use Cases: Qualcomm demonstrated the chip’s AI performance through applications like code generation in Visual Studio Code, music generation from text prompts in Audacity, and automatic language translation in live captions in OBS Studio.

According to Qualcomm’s senior vice president of compute and gaming, Kedar Kondap, the Snapdragon X Plus is designed to push the boundaries of mobile computing by offering leading CPU performance, AI capabilities, and power efficiency. PCs powered by this chipset, alongside the Snapdragon X Elite, are expected to debut in mid-2024.

Graphcore

Graphcore, a relative newcomer in the AI chipset industry, has gained attention with its IPU (Intelligence Processing Unit) technology. Graphcore's IPU-M2000, introduced in 2020, is designed to accelerate machine learning tasks. The company's architecture allows for efficient handling of complex AI models, making it an option for research labs and enterprises seeking cutting-edge AI solutions. Graphcore's unique approach has positioned it as a potential disruptor in the AI chipset market.

Graphcore has unveiled plans to build supercomputers capable of training "brain-scale" AIs, with neural networks comprising hundreds of trillions of parameters. Named “Good” in honor of British mathematician I.J. “Jack” Good, this computer is planned to achieve over 10 exaflops, or 10 billion billion floating-point operations per second. The Good supercomputer will consist of 512 systems equipped with 8,192 IPUs, along with mass storage, CPUs, and networking. It will feature 4 petabytes of memory and bandwidth exceeding 10 petabytes per second. Graphcore estimates the cost of each supercomputer at around $120 million, with delivery expected in 2024.
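The headline numbers for the Good computer are easier to read once broken down per component. The short sketch below does that arithmetic under a few assumptions: the 8,192 IPUs are the total across all 512 systems, "over 10 exaflops" is treated as a lower bound of exactly 10, decimal units are used, and the 500-trillion-parameter model is a hypothetical figure picked only to illustrate "hundreds of trillions".

```python
EXA = 10**18
PETA = 10**15
TRILLION = 10**12

# Figures quoted in the article.
total_flops = 10 * EXA            # "over 10 exaflops", used as a lower bound
total_systems = 512
total_ipus = 8_192                # assumed to be the total across all systems
total_memory_bytes = 4 * PETA

ipus_per_system = total_ipus // total_systems
flops_per_ipu = total_flops / total_ipus


def bytes_per_parameter(parameter_count):
    """Memory available per parameter if the model used all 4 petabytes."""
    return total_memory_bytes / parameter_count


# Hypothetical model size, chosen only for illustration.
example_parameters = 500 * TRILLION

print(f"IPUs per system: {ipus_per_system}")
print(f"Compute per IPU: {flops_per_ipu / PETA:.2f} petaflops")
print(f"Bytes per parameter at 500 trillion parameters: "
      f"{bytes_per_parameter(example_parameters):.0f}")
```

On these assumptions, each system holds 16 IPUs, each IPU contributes a little over 1.2 petaflops, and a 500-trillion-parameter model would have about 8 bytes of memory per parameter to cover weights and training state.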

AI Chipsets in Consumer Markets

AI chipsets have significantly permeated consumer markets, transforming the way everyday devices operate and interact with users. These advanced processors are now a fundamental component of various consumer electronics, enhancing functionality and user experience. In smartphones, AI chipsets enable features such as facial recognition, voice assistants, and enhanced camera capabilities. For instance, devices like the iPhone 13 and Google Pixel 6 leverage AI to deliver superior photo quality, improved battery management, and seamless user interface interactions.

In the realm of smart home devices, AI chipsets play a crucial role in optimizing performance and connectivity. Products such as Amazon Echo and Google Nest utilize AI to provide intuitive control over home environments, learning user preferences to deliver personalized experiences. These devices can automate tasks, recognize voice commands, and even predict user behavior, making home automation more efficient and user-friendly.

Personal assistants, both virtual and physical, also benefit from AI chipsets. Virtual assistants like Apple's Siri, Amazon's Alexa, and Microsoft’s Cortana rely on AI to process natural language, understand context, and deliver accurate responses. This capability is further extended in personal assistant robots such as the Sony Aibo, which uses AI to interact with users and perform tasks autonomously.

The integration of AI chipsets in consumer products brings numerous benefits, including enhanced processing power, improved energy efficiency, and the ability to handle complex algorithms in real time. End-users experience faster and more responsive devices, intuitive interfaces, and personalized services that adapt to their needs. As AI chipsets continue to evolve, their impact on consumer markets is expected to grow, driving innovation and transforming the landscape of consumer technology.

Combining AI with 5G technology is set to transform consumer electronics. 5G networks provide high speeds and low latency, which suit AI applications that need real-time data processing. With 5G, devices can exchange data faster, helping AI features in augmented reality (AR), virtual reality (VR), and self-driving cars work smoothly. This will open up new opportunities for AI in consumer electronics, improving user experiences and driving innovation.

High-end specifications in tablets and smartphones are driving demand for AI chipsets in consumer electronics. Manufacturers are continuously releasing advanced AI chipsets to meet this growing demand. For instance, in May 2022, MediaTek unveiled its Genio architecture and Genio 1200 chip for AIoT devices, with commercial availability expected in the second half of 2022.

Additionally, the growing use of quantum computing for analytical computation and complex problem-solving is also boosting the AI chipsets market. Google reported in 2019 that its Sycamore quantum processor completed a specific sampling task in about 200 seconds, a task it estimated would take the fastest conventional supercomputers far longer. Demand from AI, machine learning, machine vision, big data, and augmented reality (AR) workloads is in turn driving quantum computing advancements. As our understanding of quantum computing grows, demand for AI chipsets is expected to rise, further accelerating market growth.

AI Chipsets in Industrial and Enterprise Applications

AI chipsets are transforming various industrial and enterprise settings by enhancing productivity, improving decision-making, and driving innovation. In the healthcare industry, AI chipsets are being utilized to process vast amounts of medical data quickly and accurately, enabling faster diagnosis and personalized treatment plans. For instance, AI-powered imaging systems can detect abnormalities in medical scans with greater precision than traditional methods, thus accelerating the diagnostic process and improving patient outcomes.

In the finance sector, AI chipsets play a crucial role in fraud detection, algorithmic trading, and risk management. Financial institutions deploy AI algorithms to analyze transaction patterns in real-time, identifying potentially fraudulent activities with high accuracy. Moreover, AI-driven trading systems leverage chipsets to execute trades at speeds and efficiency levels unattainable by human traders, optimizing investment strategies and maximizing returns.

The automotive industry has also seen significant advancements with the integration of AI chipsets, particularly in autonomous driving technologies. These chipsets enable vehicles to process data from various sensors, such as cameras, radar, and LiDAR, to make real-time decisions. Companies like Tesla and Waymo are at the forefront, utilizing AI to develop self-driving cars that promise to revolutionize transportation by enhancing safety and reducing traffic congestion.

Manufacturing is another sector benefiting immensely from AI chipsets. Smart factories equipped with AI-powered robots and machinery can automate repetitive tasks, perform quality control, and predict maintenance needs, thereby reducing downtime and increasing efficiency. For example, Siemens has implemented AI chipsets in their manufacturing processes to optimize production lines and enhance overall operational efficiency.

A key trend is the growing integration of AI chipsets in edge devices, enabling faster and smarter data processing and decision-making at the source. Many industrial AI platforms build on NVIDIA hardware, known for high-performance computing and deep learning, including Jetson modules such as the Jetson AGX Orin and Jetson Orin Nano. This data is taken from the IoT Analytics report at https://iot-analytics.com/product/iot-chipset-iot-module-trends-report-2023/, which also covers other significant trends and technological advancements in the IoT semiconductor market.

These examples underscore the versatility and transformative potential of AI chipsets across various industries. By enabling businesses to solve complex problems and gain a competitive edge, AI chipsets are pivotal in driving the next wave of industrial and enterprise innovation.

In 2024, the top enterprise technology priorities were cybersecurity, process automation, and IT software, and they are expected to remain so in 2025. AI is predicted to rise to 4th place, with IoT staying 5th. These rankings come from IoT Analytics, which surveys senior IT decision-makers annually to understand their technology investment priorities.

Future Trends and Predictions for the AI Chipset Market

The AI chipset market is poised for significant evolution in the coming years, driven by rapid technological advancements and expanding applications across various sectors. One of the most notable trends is the continued miniaturization and enhanced performance of AI chipsets. As semiconductor technologies advance, we can anticipate AI chipsets becoming more power-efficient and capable of handling increasingly complex tasks, making them indispensable in both consumer electronics and industrial applications.

Moreover, the integration of AI chipsets in edge computing is expected to see substantial growth. Edge computing, which processes data closer to the source rather than relying on centralized data centers, benefits greatly from the low latency and real-time processing capabilities provided by advanced AI chipsets. This trend is likely to spur innovations in areas such as autonomous vehicles, Internet of Things (IoT) devices, and smart cities, where immediate data processing is critical.

Emerging applications of AI chipsets are also anticipated in healthcare, finance, and cybersecurity. In healthcare, AI chipsets can enhance diagnostic tools, patient monitoring systems, and personalized treatment plans. The finance sector can leverage these advancements for more efficient data analysis, fraud detection, and automated trading systems. In cybersecurity, AI chipsets will play a crucial role in real-time threat detection and response, bolstering defenses against increasingly sophisticated cyber threats.

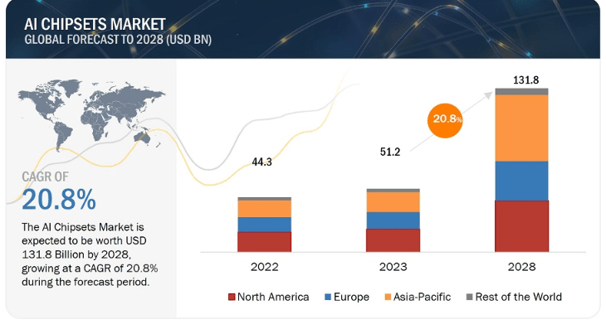

Source: www.marketsandmarkets.com

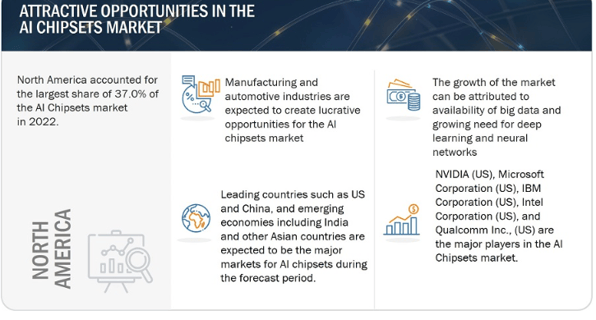

The AI chipset market was valued at USD 51.2 billion in 2023 and is estimated to reach USD 131.8 billion by 2028, registering a CAGR of 20.8% during the forecast period. Growth is driven by rising data traffic, the need for high computing power, and the trend toward autonomous vehicles.
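The relationship between the 2023 value, the 2028 estimate, and the quoted CAGR can be checked with a few lines of Python. This is a minimal sketch that assumes five annual compounding periods between 2023 and 2028; the function names are hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


start_2023 = 51.2     # USD billions, from the article
target_2028 = 131.8   # USD billions, from the article
years = 5             # assumption: 2023 to 2028 as five compounding periods

implied_rate = cagr(start_2023, target_2028, years)
projected_2028 = project(start_2023, 0.208, years)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2028 value at 20.8% CAGR: USD {projected_2028:.1f} billion")
```

The implied rate rounds to the quoted 20.8%, and compounding 20.8% for five years lands within rounding distance of USD 131.8 billion, so the three figures are mutually consistent.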

The competitive landscape of the AI chipset market is expected to evolve, with both established players and new entrants vying for market share. Companies that can innovate rapidly and adapt to changing technological demands will likely gain a competitive edge. However, challenges such as high development costs, supply chain constraints, and the need for robust intellectual property protection may pose significant hurdles.

Opportunities abound for companies that can address these challenges and develop cutting-edge AI chipsets that meet the growing demand for more intelligent and efficient systems. As the market matures, collaborations and partnerships between technology firms, research institutions, and industry stakeholders will be essential to drive innovation and ensure sustainable growth.